The Illusion of Scale: What “Good Proxy Quality” Actually Means

The Scraper

Proxy Fundamentals

Every proxy provider claims to have millions of IPs. Some claim billions. They market "the largest ethically sourced residential network in the industry."

And yet, your scraper still gets blocked.

There is a quiet gap between how proxy quality is marketed and how it behaves in production. Most teams don’t realize it until they’re debugging 200 OK responses that contain empty product grids.

The reality: Proxy quality isn’t about pool size. It’s about survivability.

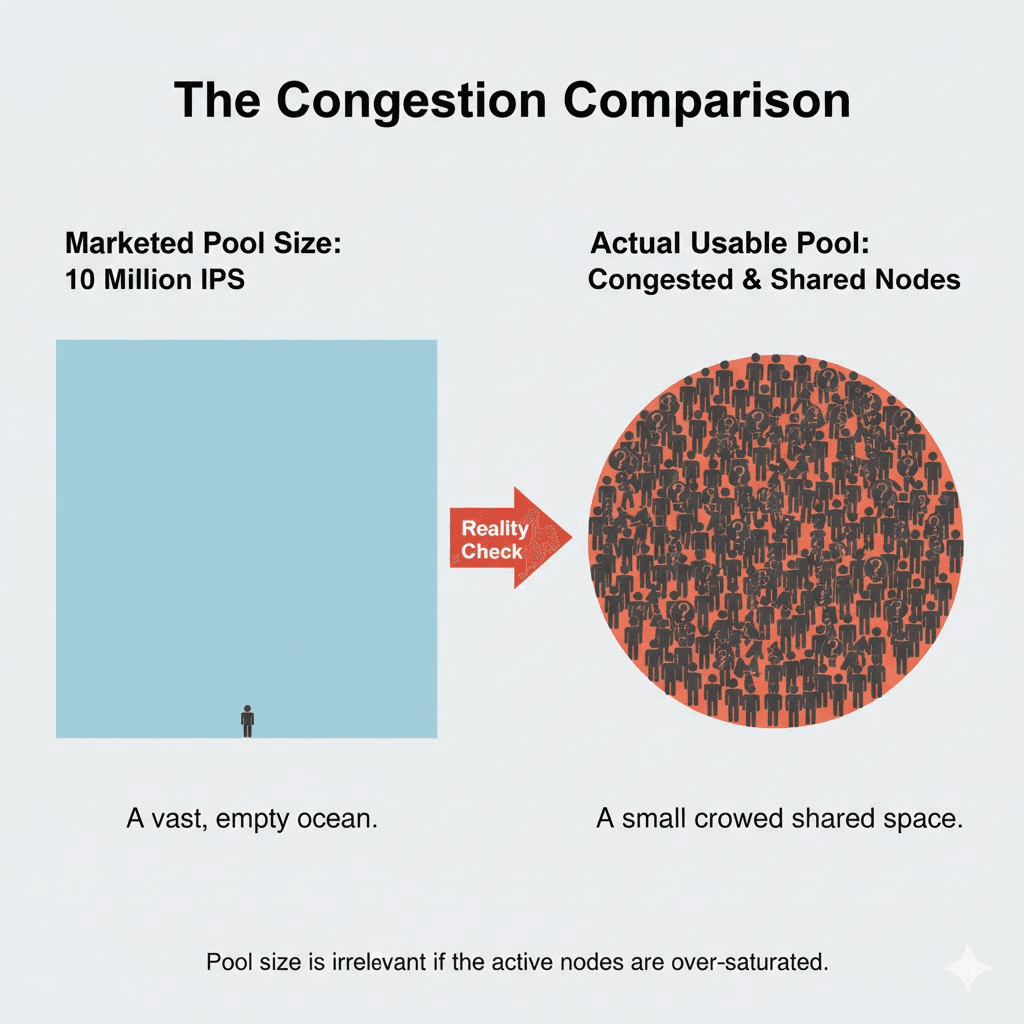

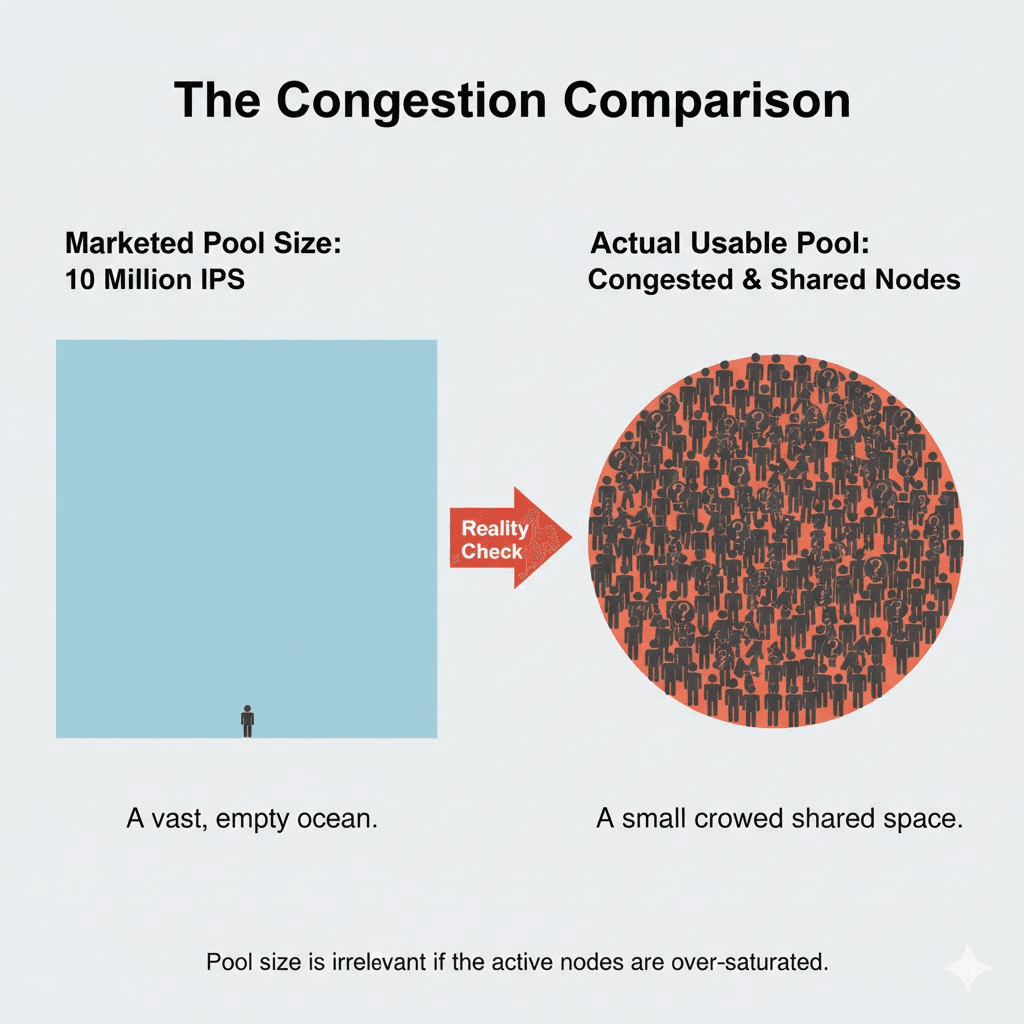

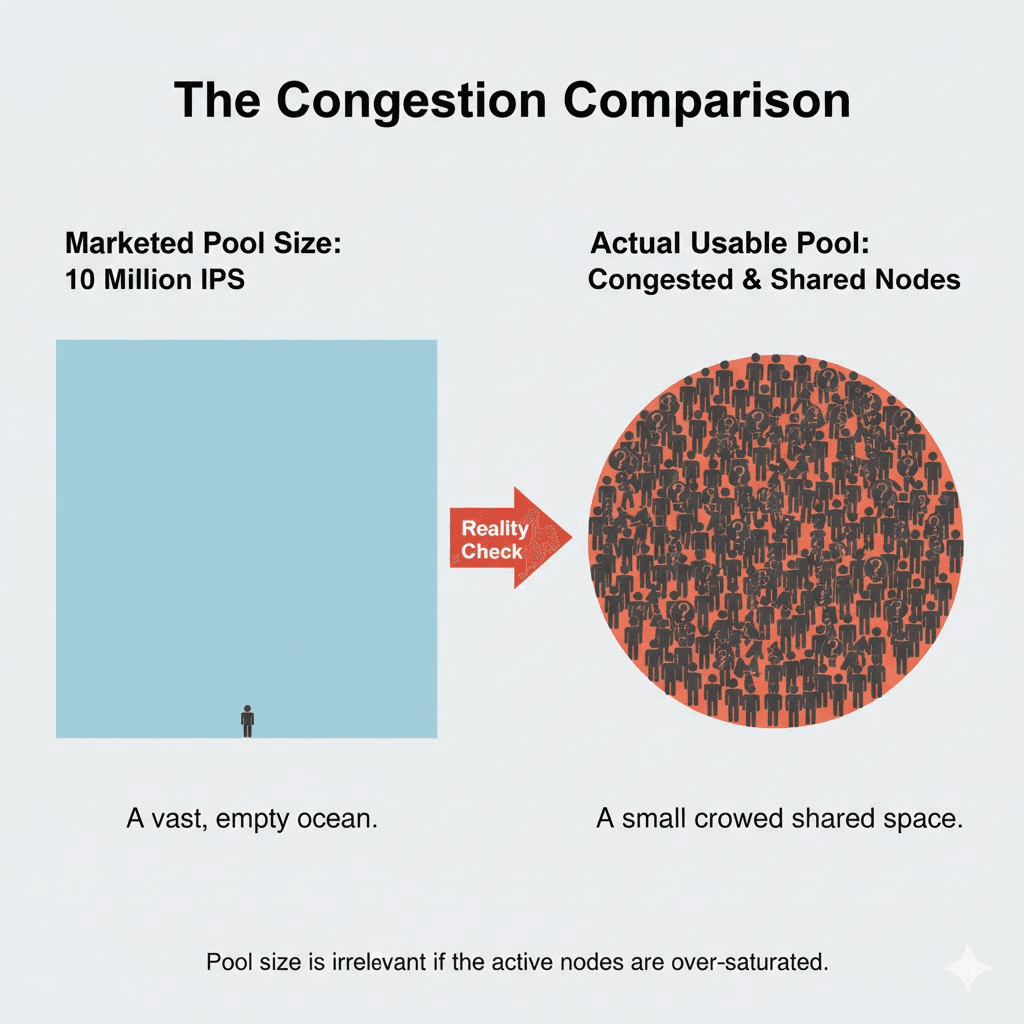

1. The "Millions of IPs" Illusion

A massive pool sounds like diversity. In theory, more IPs mean more escape routes. In practice, pool size is a vanity metric that hides the only question that matters: How much noise are you inheriting?

If 10,000 scrapers are cycling through the same subset of a "million-IP" pool, that pool isn’t large; it’s congested.
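One way to measure how much noise you are inheriting is to log the exit IP of every request in a test run and look at reuse, instead of trusting the advertised pool size. The sketch below assumes you already capture those IPs (for example, from an IP-echo endpoint you control). The function name and the two ratios are illustrative choices, not an industry standard.

```python
from collections import Counter


def pool_congestion_report(exit_ips: list[str]) -> dict[str, float]:
    """Summarize how 'large' a proxy pool really is from observed exit IPs.

    exit_ips: the exit IP seen on each request, in order. How you collect
    them (e.g., an IP-echo endpoint) is an assumption of this sketch.
    """
    if not exit_ips:
        raise ValueError("need at least one observation")
    counts = Counter(exit_ips)
    total = len(exit_ips)
    unique = len(counts)
    # Share of requests that landed on an IP already used earlier in the run.
    repeat_rate = (total - unique) / total
    # Share of all traffic carried by the single busiest IP.
    top_ip_share = counts.most_common(1)[0][1] / total
    return {
        "requests": total,
        "unique_ips": unique,
        "repeat_rate": repeat_rate,
        "top_ip_share": top_ip_share,
    }
```

A high repeat rate over a short run suggests you are cycling through a small, shared slice of the pool, whatever the headline number says.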

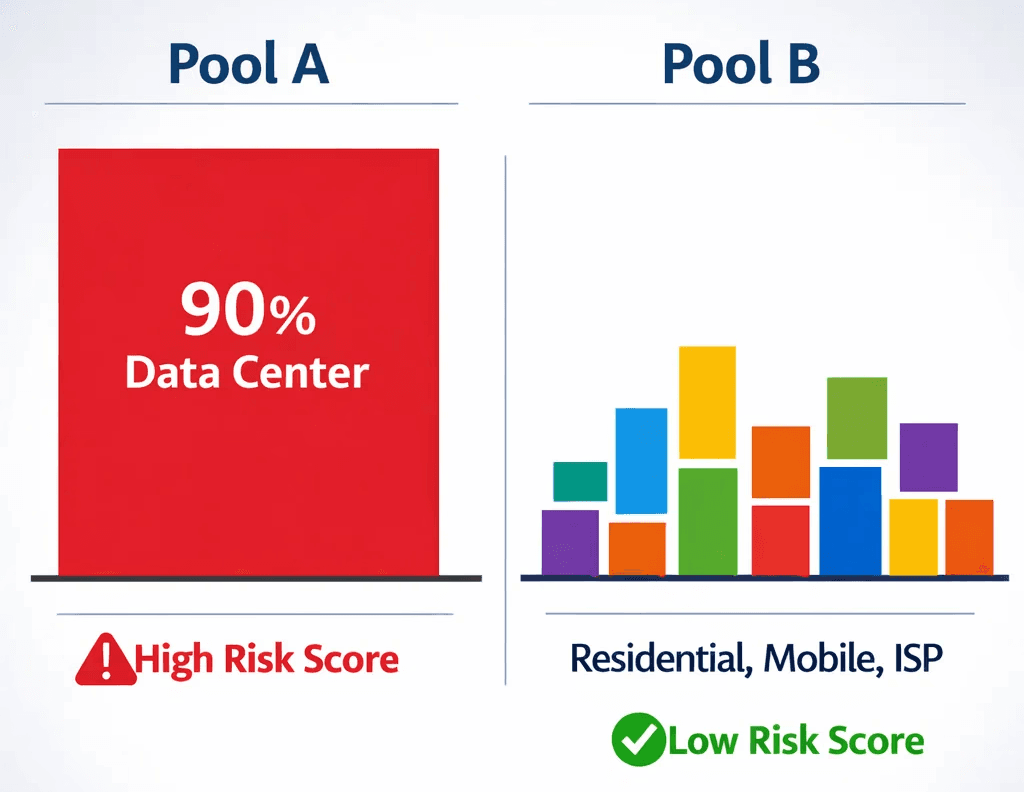

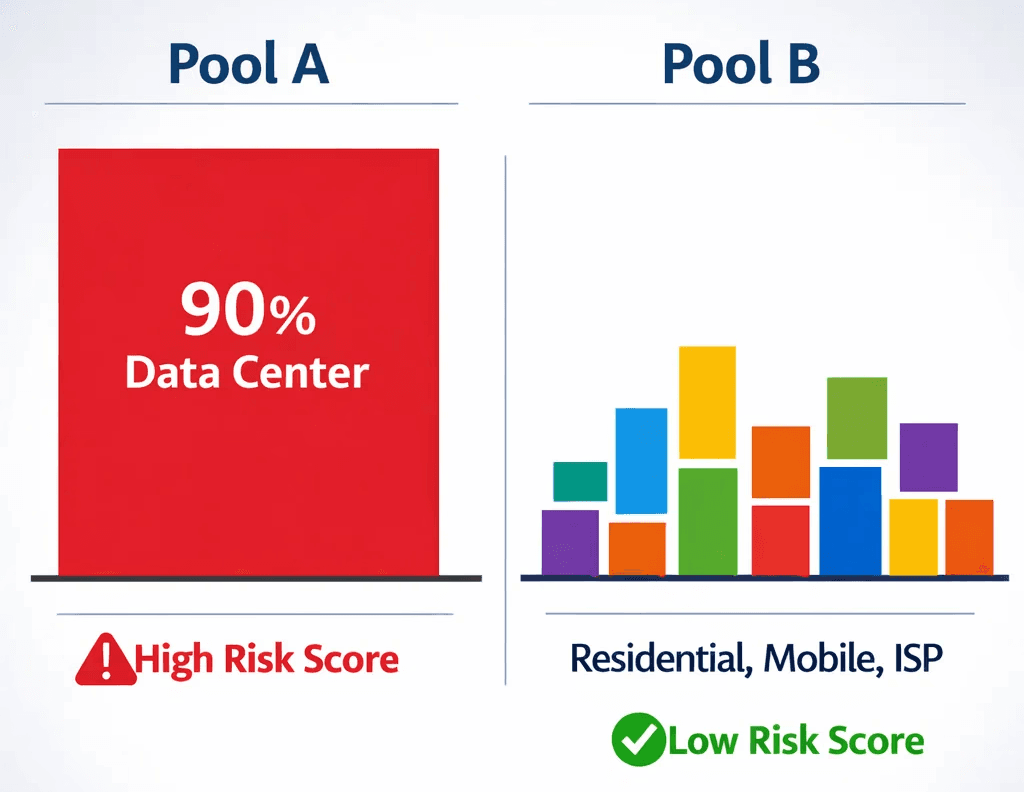

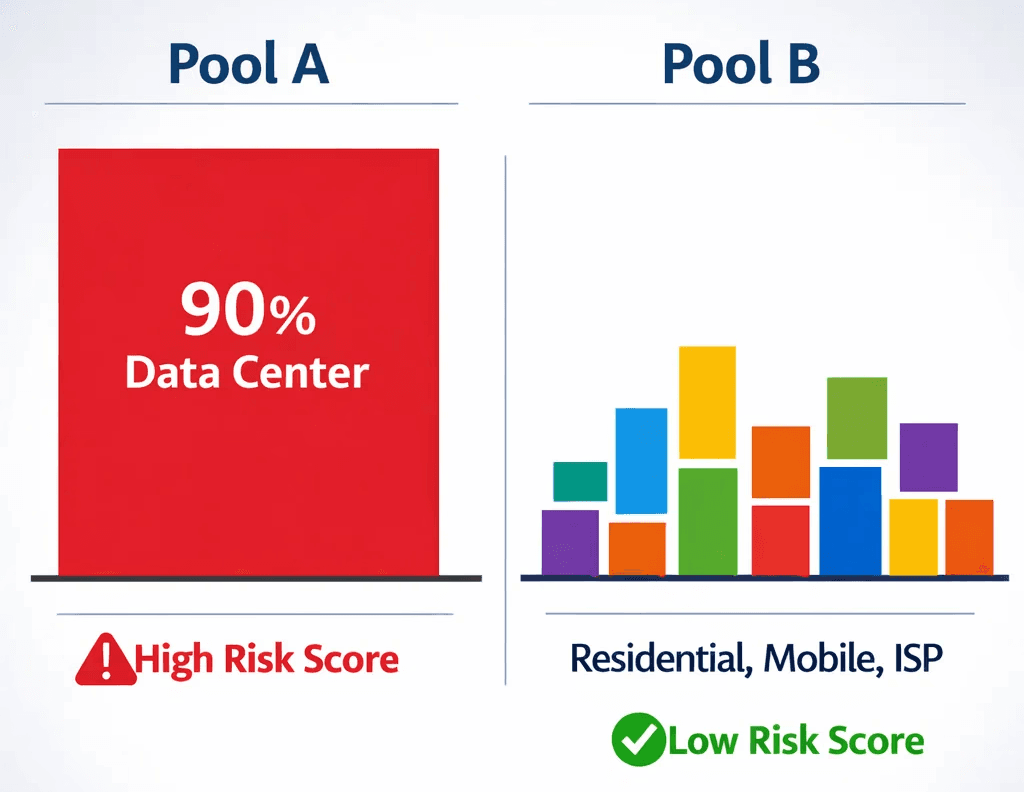

2. ASN Diversity > Raw IP Count

Modern anti-bot systems don't just block individual IPs; they score the Autonomous System Numbers (ASNs) those IPs belong to. If your proxy pool is concentrated in data center ASNs known for automation, your "fresh" IP is already flagged by the time it sends its first request.

Residential and ISP ranges mirror how real users are distributed across networks.

Spreading traffic across many ASNs means that if one segment is throttled, the rest of your fleet stays under the radar.
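ASN spread can be quantified the way market concentration usually is: with the Herfindahl-Hirschman index (HHI) over each ASN's share of your requests. This sketch assumes the IP-to-ASN lookup has already been done (for example, with an offline IP-to-ASN database). The function and output field names are hypothetical.

```python
from collections import Counter


def asn_concentration(asn_per_request: list[int]) -> dict[str, float]:
    """Measure how concentrated your traffic is across ASNs.

    asn_per_request: the ASN of the exit IP used for each request. The
    IP -> ASN mapping is assumed to happen elsewhere.
    """
    if not asn_per_request:
        raise ValueError("need at least one observation")
    counts = Counter(asn_per_request)
    total = len(asn_per_request)
    shares = [c / total for c in counts.values()]
    # HHI: sum of squared shares. 1.0 means every request used a single ASN.
    hhi = sum(s * s for s in shares)
    return {
        "distinct_asns": len(counts),
        "top_asn_share": max(shares),
        "hhi": hhi,
        # Number of equally used ASNs that would produce the same HHI.
        "effective_asns": 1.0 / hhi,
    }
```

Ten thousand IPs that all sit in two ASNs give an effective ASN count close to 2, which is the number an ASN-scoring system effectively sees.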

3. The HTTP 200 Trap

The most dangerous metric in scraping is the "Success Rate" based on HTTP codes. In 2026, a 200 OK is a transport metric, not a data metric.

| Response Type | HTTP Code | Real Result |
|---|---|---|
| Full Content | 200 OK | Success |
| CAPTCHA Wall | 200 OK | Failure |
| Empty Grid | 200 OK | Failure |
| Soft Block | 200 OK | Failure |

Real proxy quality shows up in content completeness: whether a 200 response actually contains the data you asked for. High-quality networks provide stable sessions that don't degrade or drop into "empty" states mid-run.
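The table above translates into a validator that judges each response by its content rather than its status code. This is a minimal sketch: the marker strings and the `min_items` threshold are hypothetical placeholders that would have to be tuned per target site, and `item_count` is assumed to come from your own parser.

```python
from enum import Enum


class Outcome(Enum):
    SUCCESS = "full content"
    CAPTCHA = "captcha wall"
    EMPTY_GRID = "empty grid"
    SOFT_BLOCK = "soft block"
    HTTP_ERROR = "http error"


# Hypothetical markers; real ones depend on the target site.
CAPTCHA_MARKERS = ("captcha", "verify you are human")
SOFT_BLOCK_MARKERS = ("access denied", "unusual traffic")


def classify_response(status: int, body: str, item_count: int,
                      min_items: int = 1) -> Outcome:
    """Classify a response by what it contains, not just its HTTP status.

    item_count: records your parser extracted (e.g., product cards).
    """
    if status != 200:
        # Any non-200 status is lumped together as a transport-level error here.
        return Outcome.HTTP_ERROR
    text = body.lower()
    if any(m in text for m in CAPTCHA_MARKERS):
        return Outcome.CAPTCHA
    if any(m in text for m in SOFT_BLOCK_MARKERS):
        return Outcome.SOFT_BLOCK
    if item_count < min_items:
        return Outcome.EMPTY_GRID
    return Outcome.SUCCESS


def content_success_rate(outcomes: list[Outcome]) -> float:
    """Share of responses that actually delivered data."""
    if not outcomes:
        return 0.0
    return sum(o is Outcome.SUCCESS for o in outcomes) / len(outcomes)
```

A batch of four 200 OK responses that includes one CAPTCHA page and one empty grid has a 100% HTTP success rate but only a 50% content success rate.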

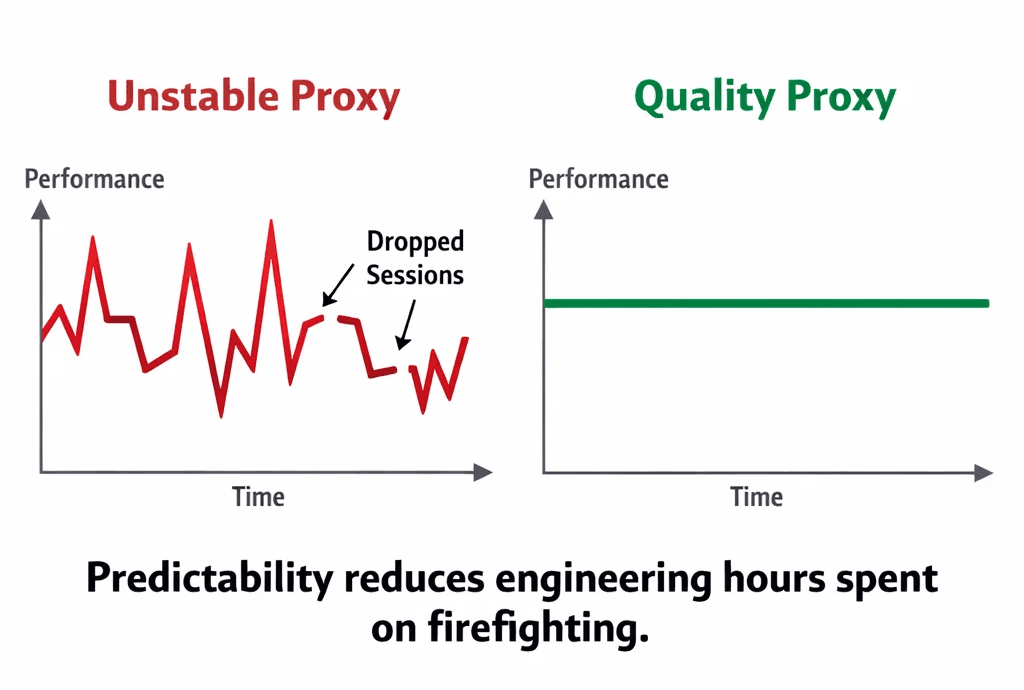

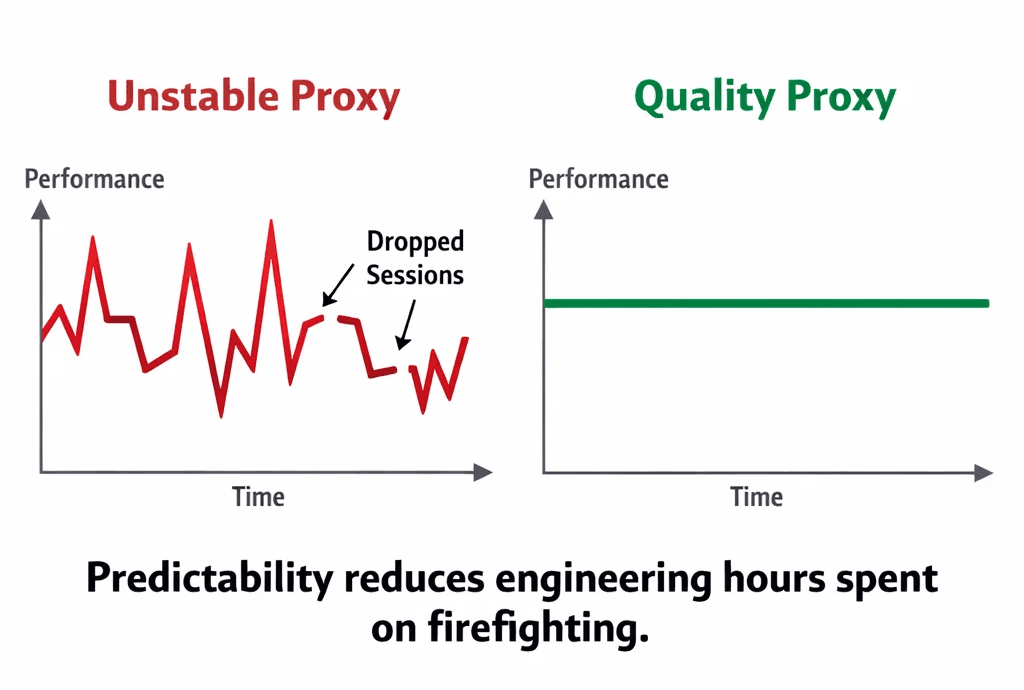

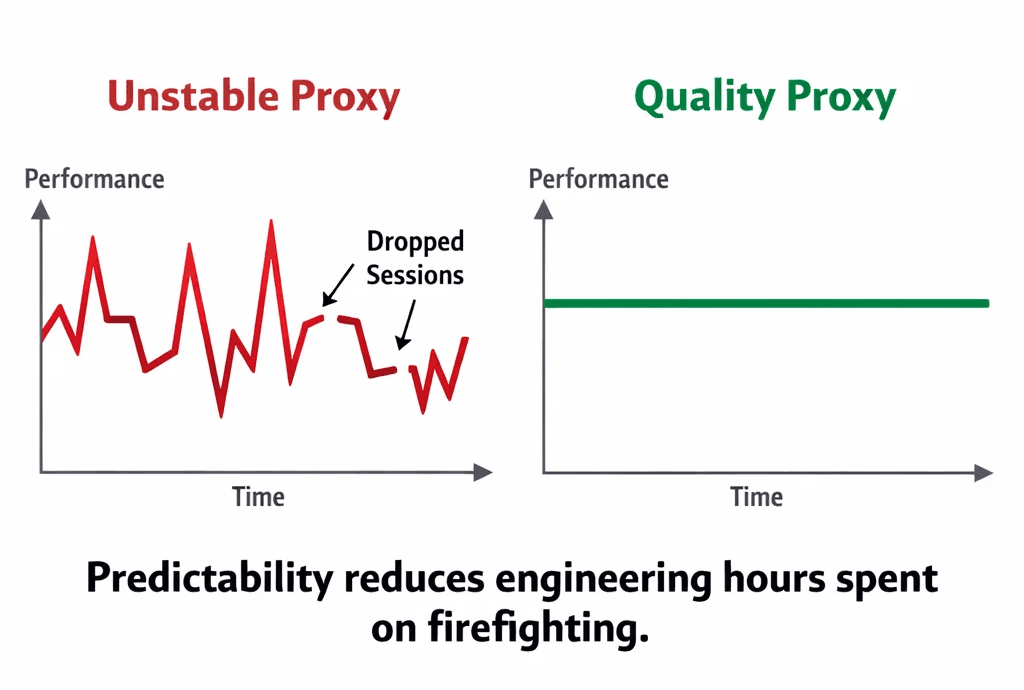

4. Stability: The Real ROI

Engineers often chase low latency, but stability is the real currency. A 120 ms stable connection with consistent routing is more valuable than a 40 ms IP that drops the session or flips geolocation every three requests.
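To compare a fast but unstable route with a slower stable one, record identity alongside latency within each sticky session. This sketch assumes you log, per request, the exit IP, its geolocated country, the latency, and a content-validated success flag; the `Observation` fields and the metric names are hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Observation:
    exit_ip: str
    country: str       # geolocation of the exit IP, from a lookup you trust
    latency_ms: float
    ok: bool           # content-validated success, not just HTTP 200


def session_stability(obs: list[Observation]) -> dict[str, float]:
    """Summarize one sticky session: did it hold its identity and keep working?"""
    if len(obs) < 2:
        raise ValueError("need at least two observations")
    # Compare each request with the one right after it.
    pairs = list(zip(obs, obs[1:]))
    ip_flips = sum(a.exit_ip != b.exit_ip for a, b in pairs)
    geo_flips = sum(a.country != b.country for a, b in pairs)
    return {
        "ip_flip_rate": ip_flips / len(pairs),
        "geo_flip_rate": geo_flips / len(pairs),
        "ok_rate": sum(o.ok for o in obs) / len(obs),
        "median_latency_ms": median(o.latency_ms for o in obs),
    }
```

Reporting flip rates next to median latency keeps a fast route from looking better than it is when it cannot hold a session together.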

The Bottom Line

In an era of probabilistic bot detection, brute-force rotation is a relic. Quality, distribution, and reputation hygiene matter more than raw scale.

When you move to high-quality infrastructure, the change isn't flashy:

- Retry counts drop.
- Data variance shrinks.
- Engineers stop firefighting.

If you’re evaluating proxies by how many IPs they advertise, you’re optimizing for a sales deck. If you’re evaluating them by survivability, you’re optimizing for production.
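"Survivability" has no standard definition, so the sketch below assumes one: for each session, count how many content-validated responses it delivers before its first failure, then take the median across sessions. The session IDs and boolean outcome lists are hypothetical inputs you would build from your own logs.

```python
from statistics import median


def requests_until_burn(outcomes: list[bool]) -> int:
    """Count consecutive good responses before the first failure."""
    for i, ok in enumerate(outcomes):
        if not ok:
            return i
    return len(outcomes)  # never failed within the observed window


def survivability_report(sessions: dict[str, list[bool]]) -> dict[str, float]:
    """Aggregate per-session lifetimes into provider-level numbers."""
    if not sessions:
        raise ValueError("need at least one session")
    lifetimes = [requests_until_burn(o) for o in sessions.values()]
    never_burned = sum(all(o) for o in sessions.values())
    return {
        "median_requests_until_burn": median(lifetimes),
        "never_burned_share": never_burned / len(sessions),
    }
```

Running the same workload through two providers and comparing these two numbers says more about production behavior than either provider's advertised IP count.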

Author

The Scraper

Engineer and Web Scraping Specialist

About the Author

The Scraper is a software engineer and web scraping specialist, focused on building production-grade data extraction systems. His work centers on large-scale crawling, anti-bot evasion, proxy infrastructure, and browser automation. He writes about real-world scraping failures, silent data corruption, and systems that operate at scale.